Updated: January 14, 2008

Recently, I have noticed a slightly annoying trend of articles popping up all over the web, pretending to portray an accurate picture of the gloomy reality in the world of software security - mainly by counting the various bugs that this or that application has had.

This article is intended to dispel some of the Fear, Uncertainty and Doubt (FUD) - and hopefully put things in the right perspective. In order to avoid any specific product bashing, I will deliberately refrain from naming products and running comparisons. But I will definitely challenge some of the loose standards that are being so freely used as software security benchmarks.

Motive

I'd like to believe that anyone taking a stand on a subject has a motive - myself included. In this particular case, money - or lack thereof. When a security vendor issues an article telling how this or that application is vulnerable, or when a security testing company uses its own tests to promote vulnerabilities and sell their services, you should not always accept the results at face value. It's not that these companies do not have a reasonable moral interest in keeping us all safe - it's the how and how much. For example, if an anti-virus company told you: all's well, no need to use an anti-virus - would you even consider buying their product? But if they issue an advisory telling of 10,000 new dreadful programs that "hackers" release every day, you just might be tempted to succumb to fear and open your wallet.

Don't get me wrong. 10,000 new dreadful programs are released every day. But that does not mean that we should all hide in nuclear shelters. Which brings me back to the topic of this article. Counting security problems as if they were potatoes.

For those not savvy enough in computers to relate to what I'm trying to say, ask your insurance agent if you need a policy. Conclusion: Security claims can only be treated as unbiased, or nearly so, when the party making them has no financial gain at stake.

Statistics

Sorry to say, but from the various benchmarking results I've read here and there, be it security or plain ole performance of a program, it seems that most people know squat about statistics. Most comparison charts are very simple: you get pass or fail, or you get percentage of difference between two or more values. But almost always, interactions are disregarded, weights and distributions are disregarded, linearity and normality of the tested environments / systems are disregarded.

Which brings me to the topic. In order to criticize security products, many people use the simple count of security vulnerabilities listed somewhere online as the one and only indicator of the quality of the product at hand. This is notoriously true for web browsers, especially when the relevant vendors launch their propaganda campaigns to justify their programs.

Properly assess the security level of an application

Here, I'll assume we handle applications that have Internet access and can contact remote machines, one way or another.

Vulnerability type

Only two options here - local and remote. Local gets the grade of 1, remote gets a round 10. Why? Well, if your system can be accessed by an untrustworthy local user, the tampered IM buddy list or deleted web browser bookmarks should be the least of your problems. Remote access is a completely different story. For one, while a local vulnerability means the computer can be accessed by very few people (tens, hundreds at most), a remotely triggerable vulnerability means that potentially any machine on the web could, under the right circumstances, have access to the vulnerable system.

To sum it up, 10 local vulnerabilities might scale up to one remote, and even then, the remote one is far far more important.

Severity

Vulnerabilities are usually divided into five categories - from critical to minor. These usually refer to the ability of the remotely triggered exploit to cause damage to the exposed system. Critical refers to system wide changes, including the kernel. Minor is usually restricted to the application itself or the interruption in the service it provides.

System-capable vulnerabilities get the severity of 10; minor ones get 1. Any number of intermediate levels can be scaled in between, with potential data theft high up. In other words, 10 minor vulnerabilities might scale up to one critical.

Permissions

Severity of a vulnerability will be shaped by the ability of a remote exploit to change the system, which brings us to permissions. In fact, two types of permissions - those of the system and those of the vulnerable application or service.

System permissions are those that might prevent the exploit from taking place (at least partially) if the exploit successfully breaks outside the application or service environment. For example, Windows Internet Explorer is a fully system-capable application. However, under a limited account (LUA), an exploit that might break outside Internet Explorer's envelope might not be able to harm the system to its full extent because of the system restrictions, regardless of what the application might be capable of.

Application / service permissions pertain to the vulnerable component, regardless of what the system is capable of. A good example is eMule, a P2P sharing program for Windows. As a security measure, eMule can be run as an unprivileged application inside Windows (even under a full administrator account). Thus, were an exploit to be successfully executed, it would still be restricted by the application's limitations.

Indeed, self-imposed sandboxing is one of the most important security features that an application can have. This also implies modularity, because fully self-contained applications can exist without being tied to the system and its inherent vulnerabilities. Sandboxed applications also have a much smaller chance of suffering from privilege escalation vulnerabilities, the type where an exploit gains access to higher permissions than those granted by the system.

Here, the UNIX style of grading seems like the best option - with a few modifications to fit the slightly different Windows world. A fully unrestricted application / service running with administrator / root privileges gets the grade of 10. Next come sandboxed applications with administrator privileges on an administrator account, with 7. Then, sandboxed applications with limited privileges on an administrator account, with 4. Then, sandboxed applications with limited privileges on a limited account, with 1. An application with a permissions grade of 1 or 4 will most likely never be the culprit in a severity 10 event.
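The four permission grades above can be written down as a small lookup. This is only a sketch: the enum member names, the function name `permission_grade`, and its three boolean parameters are my own labels for illustration, not part of any real tool. Combinations the scheme does not mention are rejected rather than guessed.

```python
from enum import IntEnum


class PermissionGrade(IntEnum):
    """Permission grades from the text; the member names are illustrative."""
    SANDBOXED_LIMITED_ON_LIMITED_ACCOUNT = 1
    SANDBOXED_LIMITED_ON_ADMIN_ACCOUNT = 4
    SANDBOXED_ADMIN_ON_ADMIN_ACCOUNT = 7
    UNRESTRICTED_ADMIN_OR_ROOT = 10


def permission_grade(sandboxed: bool, app_is_admin: bool,
                     account_is_admin: bool) -> PermissionGrade:
    """Map an application's setup to one of the four grades in the text."""
    # Unrestricted application / service with administrator / root privileges.
    if not sandboxed and app_is_admin:
        return PermissionGrade.UNRESTRICTED_ADMIN_OR_ROOT
    # Sandboxed application on an administrator account: 7 or 4.
    if sandboxed and account_is_admin:
        if app_is_admin:
            return PermissionGrade.SANDBOXED_ADMIN_ON_ADMIN_ACCOUNT
        return PermissionGrade.SANDBOXED_LIMITED_ON_ADMIN_ACCOUNT
    # Sandboxed application with limited privileges on a limited account.
    if sandboxed and not app_is_admin and not account_is_admin:
        return PermissionGrade.SANDBOXED_LIMITED_ON_LIMITED_ACCOUNT
    # Anything else is not covered by the scheme, so refuse to invent a grade.
    raise ValueError("combination not covered by the grading scheme")
```

Using an `IntEnum` keeps the grades usable as plain numbers when they are later multiplied with the other factors.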

Status

I think that any patched vulnerability, regardless of its type, gets a weight of 1. And any unpatched one gets a 10. As simple as that.

Time to patch

An extremely important factor. A critical, unpatched vulnerability is a gazillion times more severe than a critical vulnerability that was patched within hours. All that remains is to set up the time window. I believe that the following scheme should be used: patch within 24 hours - grade 1, 2 days - grade 2, 3 days - grade 3, a week - grade 4, two weeks - grade 5, a month - grade 6, and so forth. Of course, an unpatched vulnerability automatically gets a 10.
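Here is a minimal sketch of that time window as a function. It makes a few assumptions of my own: each listed duration is treated as an upper bound (so a patch after five days falls into the "a week" bucket), a month is taken as 30 days, and `None` stands for "never patched". The grades beyond one month are left as "and so forth" in the scheme, so the sketch does not make them up and raises an error instead. The names `time_to_patch_grade` and `PATCH_WINDOWS` are hypothetical.

```python
from typing import Optional

# (upper bound in days, grade) pairs taken from the scheme above.
# Assumption: a month is counted as 30 days.
PATCH_WINDOWS = [(1, 1), (2, 2), (3, 3), (7, 4), (14, 5), (30, 6)]
UNPATCHED_GRADE = 10


def time_to_patch_grade(days_to_patch: Optional[float]) -> int:
    """Return the time-to-patch grade; None means the flaw is still unpatched."""
    if days_to_patch is None:
        return UNPATCHED_GRADE
    if days_to_patch < 0:
        raise ValueError("days_to_patch cannot be negative")
    # Assumption: each duration is an upper bound for its grade.
    for max_days, grade in PATCH_WINDOWS:
        if days_to_patch <= max_days:
            return grade
    # The scheme only says "and so forth" past one month; do not guess.
    raise ValueError("grades beyond one month are not spelled out in the scheme")
```

For example, `time_to_patch_grade(0.5)` gives 1, `time_to_patch_grade(5)` gives 4, and `time_to_patch_grade(None)` gives 10.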

Quantity

Of course, we must account for the number of vulnerabilities, but merely as a multiplier for the above. But the question is, what should be counted? And how? This is tricky, since many vendors have played this card oh-so many times before, bunching several vulnerabilities into one and manipulating the numbers. My suggestion is to count the number of files on the machine that need to be upgraded. If a certain vulnerability requires 20 core system files to be patched, then such a vulnerability accounts for 20 system changes. Tracing the right hierarchy between the files might be tricky, but we can assume that even a single unpatched file might cause the entire pyramid to collapse.

This may not always be possible, because certain operating systems and applications are closed-source and tracing the exact changelogs for each vulnerability might be difficult. But since my grading system introduces enough weight in all other categories, the vendor advisories should be enough.

Lastly, patched and unpatched vulnerabilities should be counted separately. A high patched count is a good thing: while a high number of patches may hint at a flawed initial design, it is still a testimony to the software team doing their best to keep the program up to date and safe. Likewise, the fewer unpatched vulnerabilities, the better.

Grading formula

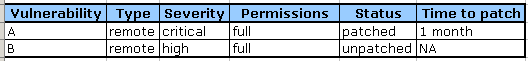

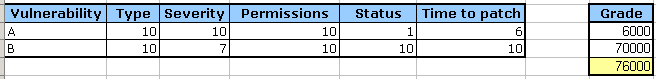

A simple product of all the factors mentioned, per vulnerability, with the total sum being the application / service vulnerability score, the lower the better. So, let's run a little simulation. Application 1:

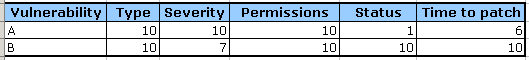

And the numbers:

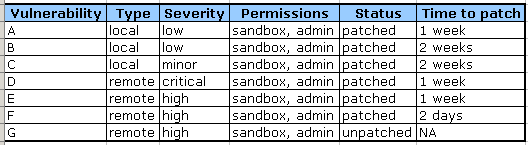

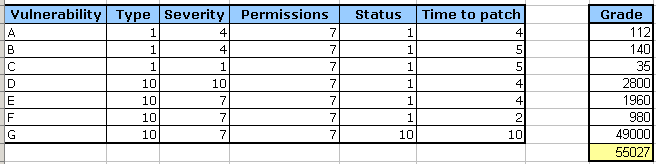

Application 2:

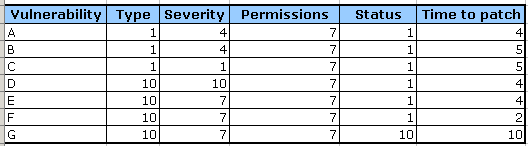

And the numbers:

What would you choose?

Today, 99% of people on the web would point to application 1 and say: "it's the safest!" But let's see what numbers tell us.

Our formula is a simple product of all factors combined; the weights are already included in each parameter:
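As a rough sketch, the formula described in this article can be expressed in a few lines of code: each vulnerability scores the product of its factors, and the application score is the sum over all its vulnerabilities, the lower the better. The class and function names are mine, and the two vulnerabilities in the usage example are made-up values chosen only to show the arithmetic; they are not the numbers from the simulation.

```python
from dataclasses import dataclass
from math import prod
from typing import Iterable


@dataclass(frozen=True)
class Vulnerability:
    """One vulnerability, described by the factors graded in this article."""
    vuln_type: int      # 1 = local, 10 = remote
    severity: int       # 1 (minor) to 10 (critical)
    permissions: int    # 1, 4, 7 or 10
    status: int         # 1 = patched, 10 = unpatched
    time_to_patch: int  # 1 to 10; 10 when unpatched
    quantity: int = 1   # multiplier, e.g. number of files to be patched

    def score(self) -> int:
        # Simple product of all factors; the weights are in the grades.
        return prod((self.vuln_type, self.severity, self.permissions,
                     self.status, self.time_to_patch, self.quantity))


def application_score(vulns: Iterable[Vulnerability]) -> int:
    """Total score of an application / service: the sum of per-vulnerability products."""
    return sum(v.score() for v in vulns)


# Hypothetical example values, not taken from the simulation above.
worst_case = Vulnerability(10, 10, 10, 10, 10)  # remote, critical, unpatched
best_case = Vulnerability(1, 1, 1, 1, 1)        # local, minor, patched in a day
print(application_score([worst_case, best_case]))  # prints 100001
```

Because the factors multiply, one bad vulnerability dominates the total, which is exactly the interaction a plain count hides.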

For application 1, we get:

And for its counterpart, we get:

Amazing?

I believe these results emphasize the way I think and believe security ratings should be given to applications. The mere count of potatoes does security a great injustice. Of course, if you find my logic flawed and do not agree with my metrics, then the results are meaningless for you. But if you do agree ...

A sum is a nice way of keeping tabs on things, but it diminishes the power of interaction - the product of several factors combined. What we can see is that unpatched vulnerabilities are an order of magnitude more severe than patched ones. Well, that follows logically from the grading system I invented. But we can also see that permissions play a major part, as does the time to patch.

Local vulnerabilities are meaningless in the grand scheme of things. And so is the NUMBER of vulnerabilities. A hundred vulnerabilities patched within a day barely scale up to one unpatched vulnerability.
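The hundred-to-one claim can be checked with a quick calculation. In this sketch, the type, severity and permissions grades are held equal on both sides (the value 10 for each is an arbitrary, hypothetical choice), so only status and time to patch differ. The function name is mine.

```python
def vulnerability_score(vuln_type, severity, permissions,
                        status, time_to_patch, quantity=1):
    """Product of all grading factors for a single vulnerability."""
    return (vuln_type * severity * permissions
            * status * time_to_patch * quantity)


# Hypothetical shared grades: remote, critical, unrestricted application.
common = dict(vuln_type=10, severity=10, permissions=10)

# Patched within 24 hours: status 1, time to patch 1.
patched_in_a_day = vulnerability_score(**common, status=1, time_to_patch=1)
# Never patched: status 10, time to patch 10.
unpatched = vulnerability_score(**common, status=10, time_to_patch=10)

hundred_patched = 100 * patched_in_a_day
print(hundred_patched, unpatched)  # prints 100000 100000
```

With everything else equal, the status and time-to-patch grades alone give the unpatched vulnerability a factor of 10 x 10 = 100 over one patched within a day.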

Conclusions

If you can enjoy an environment running with reduced privileges, you'll end up gaining far more than you can imagine. You'll automatically reduce the permissions and severity ratings of possible exploits, leaving you far less worried about their status or time to patch. And if you run as an admin, a vendor that patches quickly will go a long way toward alleviating your worries. Last but not least, properly sandboxed applications under an administrator account also go a long way in making things safer.

Security is not potatoes. More than a single dimension of analysis is required. In fact, security is a matter of quality - not quantity. Next time someone tries to scare you with numbers, think through what the numbers actually mean.