Updated: July 2, 2025

Back in 2017, Canonical decided to stop the development of its homegrown Unity desktop. Instead, it chose Gnome 3 as the next platform, and ever since, Ubuntu simply hasn't been as good as it was before. I often wonder what would have happened if Canonical had chosen, well, pretty much anything else.

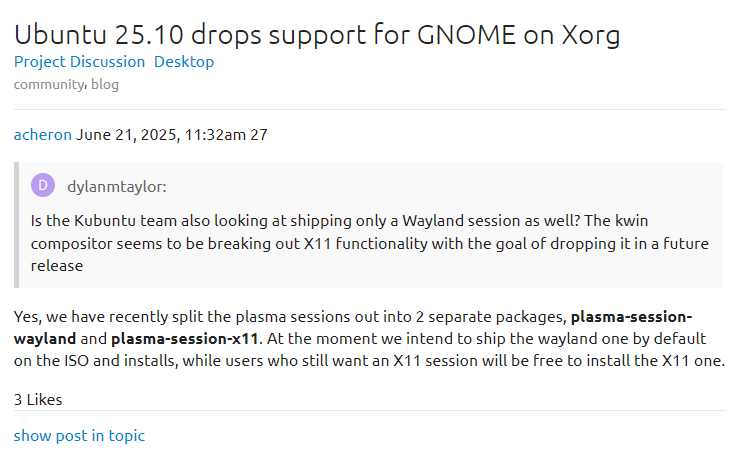

Recent news in the Linux world around the forced deprecation of the X11 session in upcoming Gnome and, consequently, Ubuntu releases prompted me to write this article. I feel sad and alarmed, and I want to take a look at the Linux tech landscape, to see where we are, why we are where we are, and if perhaps the future holds anything good and bright and meaningful for the Linux folks. Let's chat.

Canonical tech was/is better, but (purposefully) shunned

If I look at what Canonical did in the past 15-20 years or so, whether you like it or not, they did make the Linux home desktop into something beyond a nerdy abomination for the basement dwellers. Ubuntu remains the most popular distro, by far, and what it does affects the rest of the ecosystem.

Alas, rather than harness this power of influence, Canonical has consistently played, and still plays, into the hands of its detractors, perhaps even its rivals. On paper, Gnome is Gnome, but in reality, you cannot miss the Red Hat connection. And believe it or not, in the corporate world, Canonical and Red Hat are competitors.

Now, Canonical has tried to innovate a lot. Tried and ... failed or gave up:

- Upstart as the new boot mechanism to replace init; 'twas good and worked well. Better than systemd. But "we" use the pointless systemd now. For those old enough to remember, the original "argument" was that init serializes its boot, that it's "messy", and that we needed something to parallelize it, to make things faster. That was in the era of who boots faster. Well, go into my Linux section and read my 2010-2012 reviews. Boot times of 8 seconds. Hell, even today, MX Linux, with init, boots much, much faster than any, ANY systemd-powered distro on my IdeaPad laptop.

- Mir was/is better than Wayland. But it was hated, and Canonical folded. As my recent endeavors show, first the overall 2024 results and then the very recent Plasma 6.4 test, where Wayland fails even the most basic font clarity/blurriness test, as well as the raw performance test on a five-year-old laptop with integrated AMD graphics, Wayland remains a flop. Even the raw performance figures on a completely idle desktop favor X11, as my two articles on the topic show: the GPU benchmark and the kernel/CPU benchmark pieces. There's this 15-year-old perpetual beta that will be FORCED onto your desktop soon, because that's how morale improves.

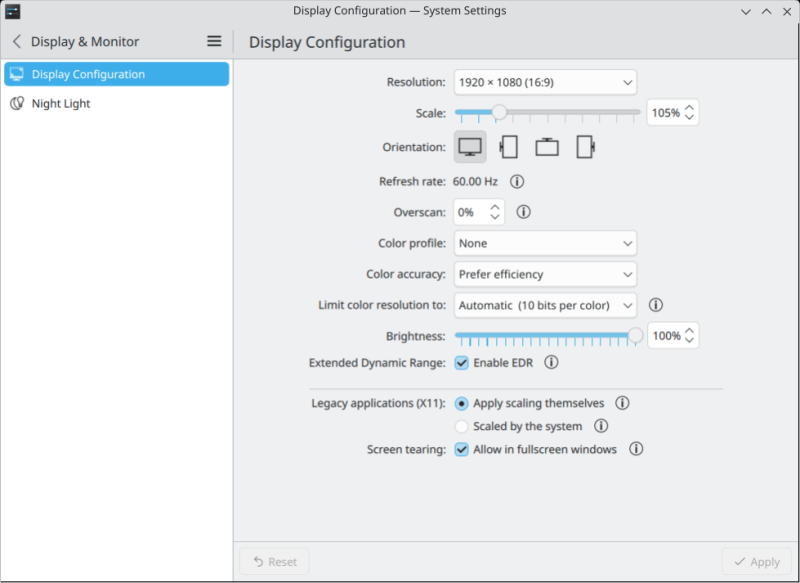

This is Plasma 6.4, Wayland, blurred fonts, out of the box.

- Unity was/is better than Gnome 3. Still is. Performance, HUD, integrated top panel menu. Ubuntu suffers from its decision to this day, and even the most recent Ubuntu releases do not have a Show desktop button. Yup. That's "modern" progress for you.

- Ubuntu Software Center, the old one from Trusty, to this day, remains the only real attempt, in the entire Linux world, EVER, to have a proper store, with proprietary software and prices and all that. As a community, so to speak, we had more 10 years ago than we have now. That old tool was better than anything else, before and after. Sadness ensues.

- Snaps offer more functionality than Flatpaks, as they run on more architectures, and are designed for numerous non-desktop use cases. And yet, they are deeply hated, because the Snap Store itself is closed source. Now, don't get me wrong, both solutions suffer massively, and have various problems. But from a purely technical perspective, snaps offer more functionality. The Snap Store is perhaps a weak link, but it's also gated by Canonical. If you read my openSUSE Tumbleweed review or my Zorin OS 17.3 review, you will see that having direct access to community sources with unverified contributions can be quite confusing, if not outright dangerous. We shall discuss this a bit more further below.

Now, let's also not forget the following:

- Ubuntu was the first proper LTS for desktop use, and still remains almost the only one. Sure, for years and years, I experimented and tried using the lovely CentOS as my "almost" perfect desktop, but CentOS, as it was, is no more. Very sad. Indeed.

- Ubuntu lets you use its Pro subscription for free (for personal use), and this gives you 10-12 years of free updates.

- Ubuntu still has the most accessible and widest range of programs (for home use), drivers and whatnot.

- Ubuntu fonts are superior to all other free Linux fonts.

- Don't forget the thousands upon thousands of Ubuntu CDs shipped worldwide, over the years, for free, because once upon a time, many people simply did not have the bandwidth to download ISO images, and Canonical stepped in.

- And Ubuntu's early success and massive growth prompted Valve to bring Steam to Linux, and under the hood, at least the last time I checked, the Linux Steam client still runs on Ubuntu 12.04 libraries (albeit heavily modified).

So you may ask: why, then, is Ubuntu not spearheading but rather timidly following (bad choices)? That's a good question. I really don't have an instant answer. Ubuntu, or rather, Canonical is willingly letting all its desktop advantages be sidelined or sabotaged. This is quite interesting, tragic, and needs a somewhat wider perspective.

Everyone thinks they can be the next Google

In the Linux world, you either copy Google or you copy Apple, and developers go: if I follow these steps, I'll succeed. Except, that's not how it works. Not at all. There's a reason why venture capitalists spread-bet their money on dozens if not hundreds of companies in parallel, expecting 99% of them to fail just so they can be mega-profitable from the one "unicorn". Because even professional investors cannot really know what the future will bring, or the unique blend of circumstances that will lead to a very specific success story.

But these critical details are lost on so many people. Thus, there's this trend whereby distros try to be like the giants. Only nerd style.

The problem is, this is (mostly) software developers making decisions. And software developers simply do not understand product. Nothing wrong with it. I repeat, nothing wrong with it. Perfectly normal. The brain of someone writing code is orthogonal to that of someone who builds philosophical ideas and concepts. Developers should write code all right, but they should never ever design products. Simple. Products are philosophy. Code is not.

Indeed, what we have in Linux is ... flawed Chromebook fever.

Chromebooks account for about 10% of total desktop share out there. You could say that Chromebooks are in fact the most successful Linux implementation (on the desktop)! Furthermore, their model is also quite different from the classic desktop. It boils down to: immutable system with writable apps. Sounds cool, and it is, for the limited usage model that these systems provide.

However, the fine distinction that made Google successful (MONEY!!!) eludes the Linux folks. The benevolent developers and the nerds get stuck on terminology like "atomic" and "updates", and we end up with the Linux desktop slowly being nudged, shoved and coerced toward the "dumb" model. Wayland is a good example, because it simply ignores tons of legitimate serious use cases. But if the goal is to have a simple consumer-oriented computer, like a Chromebook, then sure, who cares, right.

Except ... that would only hold if simplicity were the ONLY factor in the equation. It's not. So we only get the negative trade-off, and there ain't no positive one. A cascade of bad choices. If you look at the UI/ergonomic choices, Google's products aren't really user-friendly or pretty. And there's a whole graveyard of dead Google products, getting bigger and bigger.

Now, when I extrapolate over the past decade in the Linux world, I see a slow decline toward irrelevance. Software obfuscation, inefficient usage models. I see one franken-Chromebook being assembled, pro-am style. Apparently, the logic is, Linux ought to copy the "success" of Chromebooks with "atomic" builds, pseudo-shiny stores, first-world infinite-bandwidth downloads of (buggily) containerized apps, limited system functionality, plus "simple" interfaces. That ought to work, no?

Hence the explosion of atomic distros, hence the dumbing down of interfaces, hence the pseudo-touch approach, more and more and more, hence the anti-ergonomic choices. This is also why, if you open a package manager on a typical modern distro, you will casually find Flatpaks or snaps (or both) preselected and offered to the end user. This may or may not include unverified packages from unknown developers. Google Play works, so if we do a-la Google Play, it ought to work, right? Yes, yes?

(Community) store model

For years now, Linux folks have tried to solve the Linux application conundrum. In a nutshell, most professional and paid software is available on Windows (and to some extent Mac). In Linux, you can't have these vital programs because:

- Most distros have licensing restrictions, so they can't ship proprietary code.

- Most distros have no mechanisms to handle paid software, so they can't offer paid software.

- There are too many distros, too many package formats in Linux, and it makes no sense for companies to offer and support their software over 500+ different hardware-software permutations. This is why most programs come in either rpm or deb format, and are usually only offered for Red Hat or Ubuntu.

The latest attempt to fix this is by creating distro-agnostic software. Package once, use everywhere.

We have three attempts to resolve this: AppImage, Flatpak, snaps. AppImages are the closest to the Windows exe click-to-install & be-done-with-it approach. Flatpaks are primarily offered through community "stores", with Flathub being the most prominent (but not the only one). Snaps are primarily offered through Canonical's Snap Store, with Canonical in charge.

So far so good. But the new would-be store model hasn't fixed the problem. It simply moved it elsewhere!

Now, I have nothing against Flatpaks or snaps. Nothing at all, per se. I actually use some, when required, and when the use case warrants it. I do that happily. But:

- An online store without paid professional software doesn't resolve the availability problem. The big Linux desktop issue has always, always been software (programs, applications), not the frontend that serves it. Shifting the same set of programs from the distro repos to an online source called Whatever Store doesn't make any real difference to the end user.

- An online store that isn't protected like Fort Knox will become a morass. Even Google and Apple have to constantly battle thousands upon thousands of fake and malicious programs every day. They have entire teams working on this. They spend fat millions, and they can, because they earn billions. This makes the Snap Store somewhat more robust, as there's a clear ownership/accountability link, but as soon as you step away from direct ownership of software into community packaging, the model becomes rather difficult to sustain.

- In general, community stuff and professional, paid stuff rarely coexist peacefully together.

- Furthermore, to make an online store really work, there need to be financial and legal frameworks in place that cover 200+ worldwide territories. Hell, even the giants don't offer everything everywhere, because stuff like VAT and copyright laws are extremely complex, nuanced, draconian, and carry heavy penalties if not done correctly. Add DRM in (I hate it, but hey), add payment methods, and it becomes even more complicated.

Without all of these as smooth as butter, it's almost impossible to have a real working store. And, most importantly, the foundations need to be in place FIRST. The frontend is the very LAST piece of the puzzle.

But in Linux, we ONLY have the frontend concept really:

- Online stores are there, but they are de-facto 99% community content.

- You get a somewhat store-like "shopping experience" but you get unverified content.

Unverified content is a new concept that does not exist in the distro packaging world, as distro maintainers are in charge of putting stuff into the archives. Even here, there can be problems as the xz utils fiasco highlights. Here, by the way, as it happens, Ubuntu was one of the few distros that did NOT include the infected version in the repos. We shall talk about unverified software a bit more soon.

So, incomplete "shopping" experience ...

This happens in every aspect of the Linux desktop usage. There are 100+ media players, but none offers a proper, complete service to the end user. There are 100 different frontends to apt, dnf, zypper, or whatnot, but they all lack that critical product approach. Ubuntu Software Center from 2014, still the peak.

This slim, incomplete frontend concept is slowly driving the ruination of the desktop by imposing immature choices that have no real backing when it comes to execution. To briefly illustrate my point, look at my recent bout of distro testing, from openSUSE via Fedora to Zorin and KDE neon. Inside the relevant distro package managers, you are offered Flatpaks by default. Specifically, most distros offer Flatpaks from Flathub. There are three reasons for this:

- A reactionary choice against Canonical mandating preselected snaps in Ubuntu flavors.

- Belief that Flatpaks will solve the application availability problem in the Linux space.

- Wrong approach to desktop containerization a-la Chromebooks.

Now, the thing is:

- Flatpaks, by and large, offer more or less the same content as the distro archives. This is just re-formatting.

- Some Flatpaks are officially packaged by their upstream owners, and this is amazing. Alas, many are not.

In a way, this new packaging mechanism does "solve" a problem. But that problem, of availability of software, is only a tiny tiny sliver of the bigger, unresolved picture. Even so, alongside the few advantages, you get a whole gamut of new problems:

- Distros now offer content from third-party resources. Sure, you could always add community repos in openSUSE, PPAs in Ubuntu, or configure stuff like RPM Fusion or EPEL for Fedora or CentOS. But this has always been a deliberate choice by the end user. The preselection bypasses the distro safeguards.

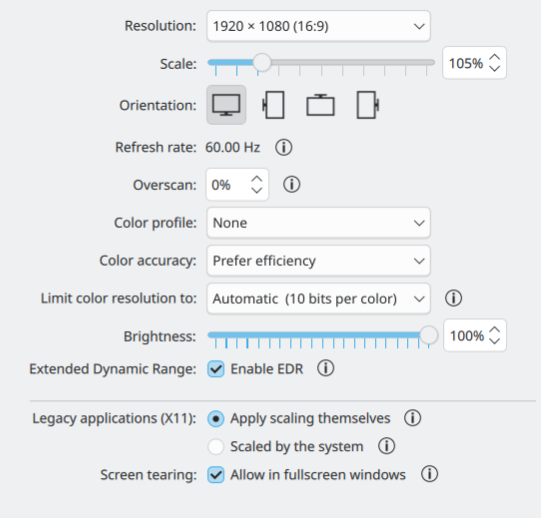

- A lot of the programs are packaged by community people, who have no direct affiliation with the upstream owners of said code. This can be great, but it can also be tricky. For example, say you search for Steam and see multiple entries, including unverified packages built by who knows who. Even if 100% benevolent and legit, I don't think stores ought to offer anything that does not come straight from their actual owners. BTW, the SAME problem exists on Android and iOS, too. Hence, most software is useless, untrustworthy, and you need to very carefully comb through the stores. Now, Linux wise, see the screenshot below, and tell me if you understand what you see, and what you ought to click:

What about the Snap Store, you say? What about snaps being preselected in Ubuntu, Kubuntu, etc? Well, there are some small differences here. Since Canonical owns the Snap Store, you have "someone to blame" for what you see. Technically, yes, the Snap Store may also offer community-packaged programs rather than verified upstream content. And there have been cases of maliciously packaged snaps. Yup, the same kind of problem that affects Google Play, Microsoft Store, Apple App Store, and any other store out there, any place where people are allowed to upload their own code.

From my experience, due to how the Snap Store works, you are far less likely to see Steam or Chrome or similar shown as a result unless packaged by their verified owners. But the problem still exists, and outside of the popular names and verified snaps, you don't really have any guarantee that the program you want to use is as good as it purports to be. I'm gonna repeat myself. Yup, the same kind of problem that affects Google Play, Microsoft Store, Apple App Store, and any other store out there, any place where people are allowed to upload their own code.

In a nutshell, the problems are massive.

But the Linux developers are stuck on the "it worked for Google" sentence. So yeah. We're undergoing the slow, sad conditioning of users to Chromebookify their experience. The Linux interpretation. Only, this is NOT going to win the hearts and minds of the common people. Neither the nerds nor the normies.

That's not how it works.

- The new model will alienate skilled users who seek trust and stability.

- The new model cannot attract normies because the Linux stores still lack 90-95% of what makes stores popular, successful or sustainable (and profitable).

- Yes, the normies need stable, mature foundations. And these cost money. A lot. Nerds always forget the crucial element in Google's "Linux" success. Billions upon billions of dollars. MONEY!!!

The end result is, the Linux desktop is "embracing" half-baked "modern" solutions, but you don't get anything in return. You won't get a Google or an Apple experience in return. Nope, you will only get a half-broken setup of unfinished projects, because 1) software people don't understand product 2) Linux is notorious for never finishing anything 3) high-quality products are extremely expensive, and money needs to come from somewhere, it sure can't be made from the good will of hard-working volunteer contributors.

So that's what we have. A slow, self-imposed, self-destructive rumble toward oblivion.

How to do it right

Not all is lost. There is hope. Massive hope.

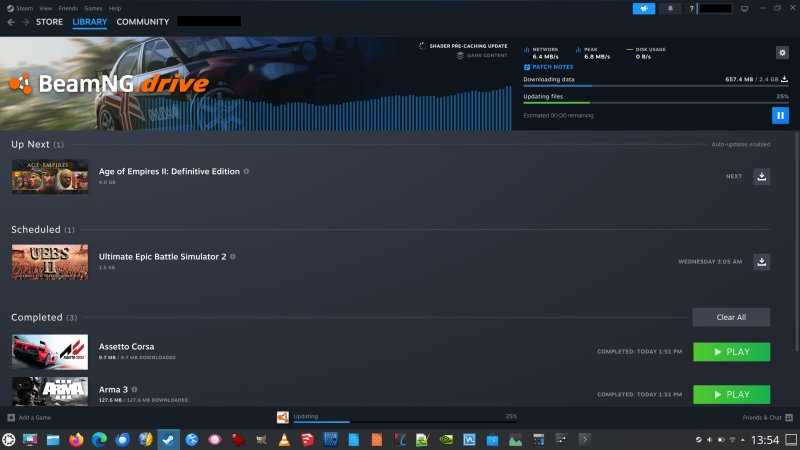

Do you know which entity is not trying to limit and cripple Linux? Valve! Steam! Not only do they support whatever is offered natively, they actually go out of their way to enable EXTRA support for Windows games on top of Linux through the fabulous Proton compatibility layer. Not only do they not deprecate anything, they make the impossible possible!

And every game is sacred! Steam makes sure that if it works for you once, it keeps working forever. The whole gamut. They are not introducing some new pseudo-tech and saying, well, from tomorrow, this new thing is the new thing, and some 20-30% of games will stop working.

This is why, whenever I see a "proposal" to remove 32-bit library support in Linux, I shudder. Most games are 32-bit. Most programs are 32-bit. To even suggest these should be removed implies a misunderstanding of how the world works, across gaming, enterprise and the consumer space.

Steam is essentially 32-bit, and regardless of the client itself, most games, especially most Windows games, are 32-bit. And Valve is not saying, hey, let's kill the old legacy stuff. No. They understand their market, they understand the importance of backward compatibility.

Then, let's not forget, Steam is a COMPLETE STORE SOLUTION! Probably the most successful one in the entire world!

Tons of content, check. Community content, check - which still carries the same perils as any, but it's there if you want it. They have everything: free games, paid games, DLC, you name it. You have integrated chat, you have the workshop, anything you can imagine for an end-to-end experience, it's all there.

A complete, perfect experience for gamers.

Valve, in a way, has made Linux a hundred times better and more accessible than it ever was. Exactly as I wrote in my 2009 article on how to make Linux more successful and grab a meaningful desktop share. So yes, it will be Steam that will make Linux happen, through games, just as I said. Please take a look at what I penned down about 16 years ago. Do it.

Also, if you're wondering, do you know what desktop SteamOS uses behind the scenes? Arch Linux WITH Plasma! This is the hottest commodity in the Linux world, if you think about it. And with an estimated four million Steam Deck units sold in the past two years, and selling like hot cakes, this may soon become the de-facto most popular Linux distro of them all! If you're interested in the internals of this system, please do some digging. You will be surprised by what you find. And yes, you will find X11 and Wayland in the equation, too! But effectively, the latest SteamOS 3.7 runs X in the background. Because.

As I always said, I have nothing against Wayland. If anything, if there's one company that may yet "fix" Wayland, it will be Valve. It won't be general purpose per se, but they will make it work for the gaming platform, a Linux console, and will tightly integrate all the bits and pieces.

Food for thought, dear people. Food for thought.

Back to Canonical

For reasons beyond my knowledge or willingness to speculate, Canonical has given up way too many times, and every time, this leads to a slightly less good Linux desktop landscape. Every single time. Even small examples like the "deprecation" of net-tools (ifconfig, netstat) in favor of the less convenient ip and the badly named ss illustrate this worrying spiral.

Binary logs in systemd. How do you switch to a different runlevel? Serious administration of remote systems under Wayland? All of these are eroding the KISS principle upon which Linux was founded, leading to more and more obfuscation. Over time, the user has less "easy" ability to do things. You don't believe me? Well, try configuring DNS manually on a modern distro. Let's see how it goes.

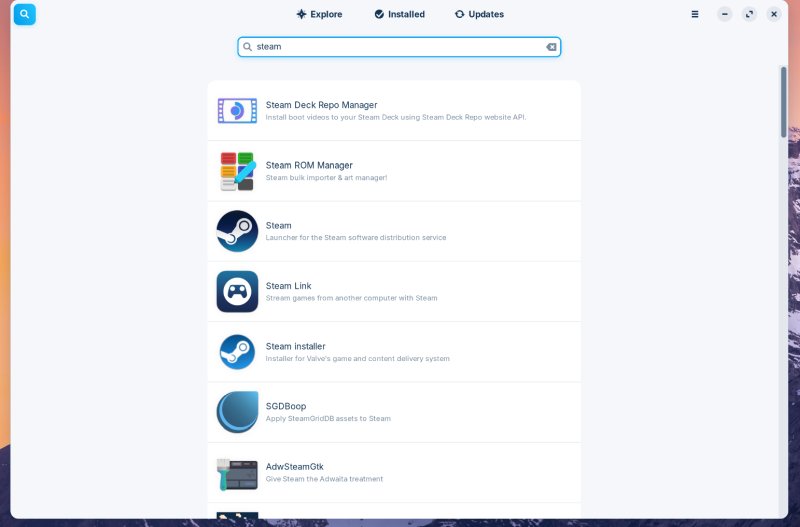

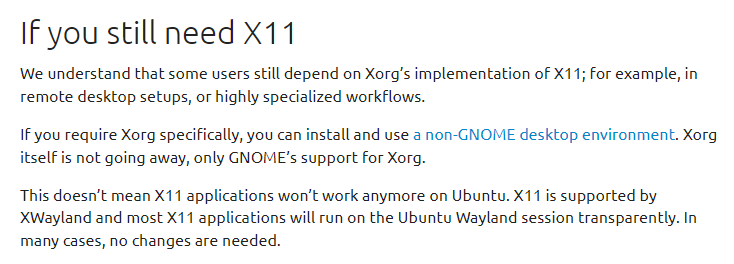

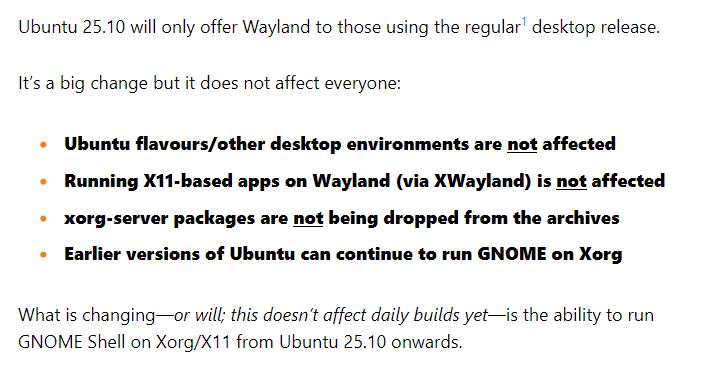

And unfortunately, Ubuntu directly enabled this by allowing bad choices outside its sphere to dominate. Now, most interestingly, Canonical is doing what Gnome wants. First, Gnome announced no more X11. Their agenda, fine whatever. Now, Ubuntu says no more default X11 session in 25.10. So yes, using beta Wayland, great. And then, all of a sudden, Kubuntu, which ought to be completely unconnected to Gnome, does the same thing, even though all the early messaging was: this does not affect other desktop environments!

In more detail. Look at the messaging. Canonical says:

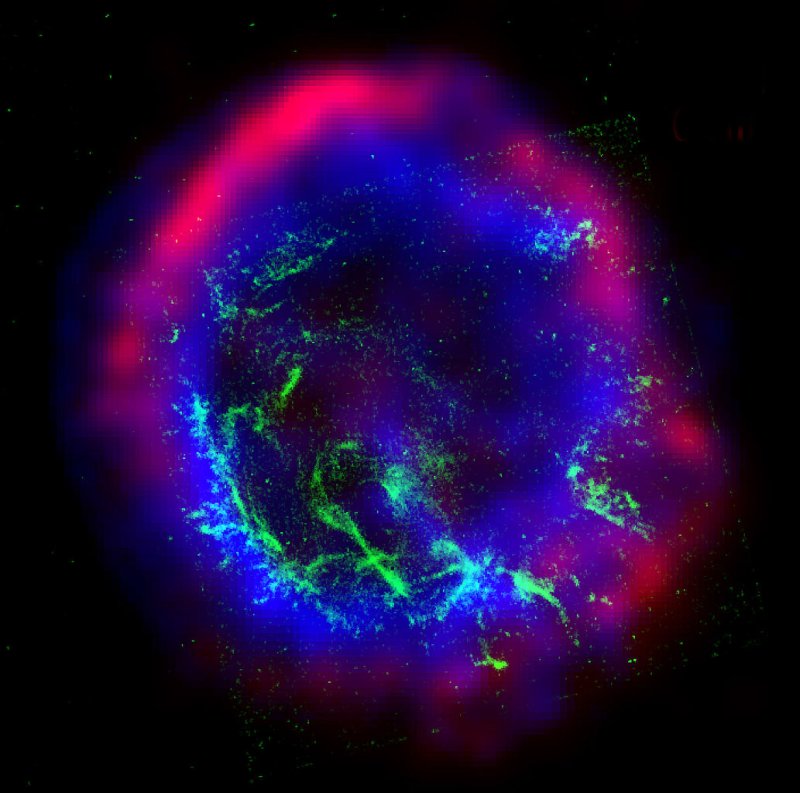

Screenshot, so there's no retrospective text change. And no, most applications will not run transparently. Even a basic tool like scrot cannot take screenshots, despite XWayland.

OMG! Ubuntu references this:

And a few days later, Kubuntu negates this. And with Wayland still beta quality!

Yes, you can install the X11 session, but why isn't it there by default? Why make it hard for people to switch if they need to? What's the purpose of this "default removal"? Why FORCE Wayland? What's the end goal? To make the users suffer with deficient technology to prove a point? To show how "new" technology is successful by the merit of no choice? Look how quickly things escalated.

Yes, KDE has committed to supporting X11 until Plasma 7. Now, this is an arbitrary date, totally unjustified, but at least there's a plan, and there's clear communication. You cannot fault KDE for how it messages its vision and work. They are doing a splendid job right there. Then again, this has no meaning if distros just do whatever they want.

Less freedom, less choice, the way forward

Linux is all about choice, they say. A fallacy. As time goes by, the Linux user has less and less freedom in doing things without thinking about hardware x software permutations they must satisfy. Gnome will soon be Wayland-only. Plasma will do the same in the future. For example, Plasma's System Monitor won't show some information if running under init (see the MX Linux review). Some software, like say snapd, is systemd-only.

This is EXACTLY, EXACTLY like Microsoft mandating TPM for Windows 11. Exactly. We call Microsoft's move asinine, anti-user, typical corpo snake oil, a crude attempt to make people buy new machines and run their latest failure. But in the Linux world? This is "progress" and "future", it seems.

No, the user has no real choice if they cannot write and compile software themselves. They are at the mercy of whatever various projects decide. And the cascade effect is really fast. Gnome is going all in on Wayland, Ubuntu follows obediently, Kubuntu, which is totally unconnected, follows suit. Yes, much choice, such freedom, wow. And what if tomorrow systemd is another must, and then Secure Boot, and then TPM? What then?

And I know what you will say: Dedo, you can still install X11. Well, in just a few days, we went from "all is good and nothing changes" to "X11 session is not installed, it's in the repo". By the same logic, it could take only a few more days for X11 to be removed altogether. And again, this is happening while the so-called successor technology is not yet 100% fit for purpose. The only way to get it used is to kill its rival. X11 must die, it seems, for Wayland to become a success.

We've gone from Gnome doing its thing to a situation where, soon, you won't have any choice. Remember, Wayland is still beta quality, and worse than even I expected. Look at my Plasma 6.4 review. Look at my benchmarks. Please do. Three months or so before Kubuntu 25.10 gets released, THIS is what you get:

Bad borders, fuzzy fonts, GPU spiking to 100% every 2-3 seconds in Wayland, on AMD integrated graphics.

X11 should not be an "optional extra" in the repos. It MUST be the default, because it works. If anything, Wayland should be the secondary choice. I don't mind it being there and improving. That's fine. Once it reaches maturity and quality, sure, we can talk about it. But that's the thing. It's not ready. It is not. And just as the Windows 10-11 story plays itself out, with some small chance of Linux gaining whatever traction it can, it will all go to bits, because Wayland will simply ruin good usability.

We're now in a state that has never existed before in the Linux world. Never once before was the Linux user restricted in what they can do. The only limitation was their own choice. You could take whatever distro and boot it on any hardware platform you wanted. You were not limited by SOFTWARE.

But now we're entering the realm of software limitations in the Linux desktop world. And accelerating fast. Sure, various smaller projects won't follow, because they have no resources to do it any which way, but the big guys will do the corpo move.

And here, what really annoys me is, Ubuntu can make all the difference it wants. Ubuntu can simply say no, and stick to the principle of quality and provide the best experience to its users. Instead, once again, it will do as its "frenemy" does. But what if Ubuntu dropped Gnome? Overnight, whatever Canonical picks would be the next big thing, and that's it. All other distros are tiny compared to Ubuntu, and so, by simple merit of its size, whatever Ubuntu does becomes the Linux desktop thing.

This brings me to my alternate reality question: What if Canonical selected Plasma for its desktop?

Conclusion

Quite often, I look back and reminisce about what could have happened. If Unity stayed. If Canonical chose Plasma or MATE or whatever. This can and should still happen. Plasma is the best desktop, hands down, and making it default on the most popular distro would and will revolutionize the Linux world. It should be the interface that Windows people see when they migrate over. Especially since SteamOS also uses Plasma behind the scenes.

Canonical still has a chance to do it. Will it happen? I don't know. But it would be nice if Ubuntu flexed its muscles and used its influence to make a real change. Otherwise, it risks becoming a rather milquetoast, forgettable idea that will be remembered, 20-30 years from now, as one of the early pioneers of Linux. But it will be the likes of Steam carrying the torch forward.

Once, CentOS and openSUSE were mega names in the distro world. For many various reasons, they have lost their shine and impact. Ubuntu may yet fall prey to the same destiny. In my opinion, this hinges quite heavily on the architectural decisions Canonical makes. It's already set itself back immensely by deprecating Unity, by succumbing to the pressure over Upstart and Mir. It chose mediocre over right. Now, once again, it faces a major test, and it has a real chance to break free and make a stand. Ubuntu with Plasma. Wouldn't require much work, either, as Kubuntu is already a thing. Ah, a thing to see. Rant over, see ya.

Cheers.