Updated: July 7, 2025

Yo, remember when I did my Wayland vs X11 benchmarks on an AMD-powered machine, and then, I said that I could expand my testing but might not do it due to a lack of free time? Surprise! I uncovered a fresh bucket of free time, and so I decided to run another round of benchmarks. But check this out, a laptop with hybrid graphics, Nvidia plus Intel. Should be interesting!

If you missed the drama, I did my Plasma 6.4 review recently, and discovered a bunch of alarming things, including subpar Wayland performance, even on an idle desktop. I confirmed this in my GPU tests and also power & CPU tests. Now, I will (try to) repeat my checks and benchmarks on a different machine, one with Nvidia hardware. And then, expand on those. Begin to commence to start, we shall. After me.

Test conditions

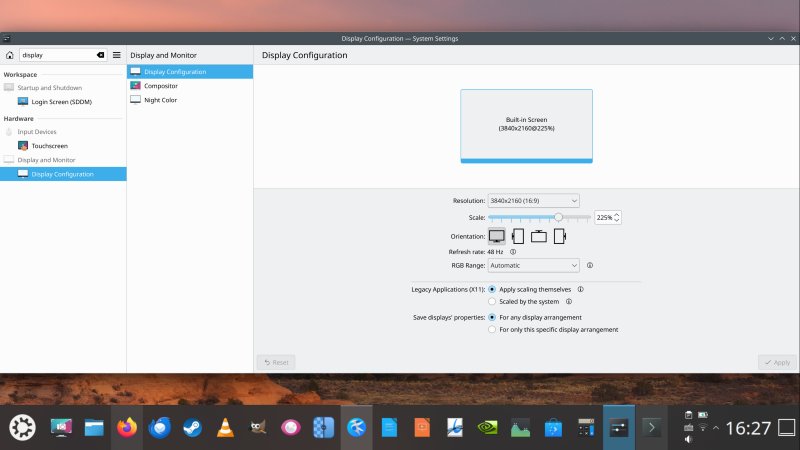

First, I'm going to run this experiment in Kubuntu 24.04, installed on my Lenovo Y50-70 laptop. This is not an identical test to the Plasma 6.4 one. But, again, it ought to be indicative. It will tell us the state of things in Kubuntu's current LTS, plus give us an opportunity to compare Plasma 5.X and Plasma 6.X, to some extent.

You may wonder about the hardware. The Y50-70 machine is 11 years old, and currently running Kubuntu 24.04. In fact, I've recently done a little experiment on this box, including an installation of MX Linux 23.6, and then a subsequent reinstallation of Kubuntu onto the box. I will share these stories slash reviews in the coming days. Stay tuned.

Now, you're going to say, that machine is too old or some such ... I beg to differ!

- It has an 8-core i7 processor, 16 GB of RAM and has recently been upgraded with a modern SSD. This is more than enough for any trivial everyday task that people typically do.

- The machine has a solid Nvidia graphics card and 550.XX drivers. This card is good enough to play the likes of ArmA 3, Cities Skylines, BeamNG.drive, or Wreckfest in high detail without any stuttering. And that was even before the SSD upgrade!

- I can and have used DaVinci Resolve and Upscayl on this machine. Speed, elegance, you name it.

- The laptop can play 2160p 60FPS movies without any issues. Smoothly. And that's in VLC even with the integrated Intel card, let alone with the Nvidia card. I mean, a 2014 machine playing 4K content. What's not to like?

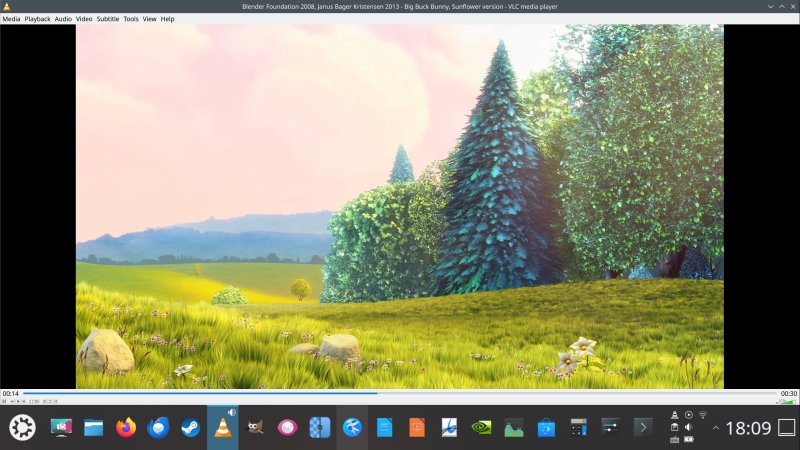

4K 60 FPS smooth playback, with either integrated or dedicated graphics card.

- Speaking of 4K, the laptop also has a proper 4K display!

This may be "age" old, but this is a perfectly capable machine for 99% of modern daily tasks, including the hallowed buzzwordy 4K 60 FPS videos. And so, it will be our scapegoat for today.

Power utilization

As with the other machine, I ran powertop to see what gives. On idle. The difference is, in Plasma 5.27, which comes with Kubuntu 24.04, you don't have the Wayland power efficiency and color accuracy mode as I showed in the Plasma 6 review. So, one Wayland measurement. To make things more interesting, and since this is an older beast, I also decided to check how Plasma behaves with compositing turned off (in X11, as you can't do it in Wayland). To wit:

| Metric | Wayland | X11 compositing on | X11 compositing off |
|---|---|---|---|
| Battery drain (W) | 22.42 | 21.86 | 22.26 |

There was almost no difference in the power consumption on idle. With compositing off, I recorded a slightly higher power drainage than with compositing on. As I told you, this ain't rocket science, and battery measurements can be inaccurate.

Percentage wise, the X11 (ON) was 2% better than X11 (OFF), and 2.5% better than Wayland, whereas the "bad" X11 session was 0.7% better than the Wayland one. For all practical purposes, the results are almost identical. The battery has 73% health, and it's the original unit, so please take this specific test with a big 11-year-old grain of salt.
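For the curious, the percentages above can be reproduced with a few lines of arithmetic. This is only a sketch: the helper name `pct_less` is made up for illustration, and it assumes "better" means "draws less, relative to the hungrier session".

```python
# Idle power draw in watts, copied from the table above.
idle_watts = {"wayland": 22.42, "x11_on": 21.86, "x11_off": 22.26}

def pct_less(value, baseline):
    """How much smaller `value` is than `baseline`, in percent of `baseline`."""
    return (baseline - value) / baseline * 100.0

pairs = [("x11_on", "x11_off"), ("x11_on", "wayland"), ("x11_off", "wayland")]
for better, worse in pairs:
    saving = pct_less(idle_watts[better], idle_watts[worse])
    print(f"{better} draws {saving:.1f}% less than {worse}")
```

With differences this small, a single noisy powertop reading can flip the order, so the ranking matters less than the fact that all three sit within about 2.5% of one another.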

CPU data

Vmstat results, as in the previous benchmark. Idle desktop results:

| Metric | Wayland | X11 compositing on | X11 compositing off |
|---|---|---|---|
| Average no. of tasks in the runqueue | 0.067 | 0.050 | 0.083 |
| Total tasks in the runqueue | 4 | 3 | 5 |
| Interrupts (in) | 311 | 268 | 199 |
| Context switches (cs) | 423 | 415 | 207 |
| Idle CPU % (id) | 99.18 | 99.48 | 99.57 |

The results here are similar to what we've seen on the system with AMD processor and graphics, with the caveat that I don't see the GPU spikes on this machine. Then again, different platform, different architecture, different Plasma version.

Wayland generated 56% more interrupts than X11 with compositing off, and 16% more than with it on. For context switches, X11 (ON) and Wayland were almost identical. The CPU figures are also quite interesting. Utilization wise, Wayland tallied 0.82%, X11 (ON) 0.52% and X11 (OFF) 0.43%. Effectively, Wayland consumed almost double the CPU of its predecessor rival technology (with compositing off) with the desktop just sitting there, doing naught.
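If you want to reduce raw vmstat output to figures like the ones in the table, a minimal sketch could look like the following. It assumes one-second samples (the averages above are consistent with 60 samples, e.g. 4 / 60 ≈ 0.067). The function name and the sample text are hypothetical, made up for illustration, and not taken from the actual runs.

```python
def summarize_vmstat(text):
    """Average the r, in, cs and id columns of `vmstat <interval> <count>` output."""
    columns = None
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "r":
            # Column-name header line; newer vmstat builds may add columns,
            # so look values up by name rather than by position.
            columns = fields
        elif columns and fields[0].isdigit() and len(fields) == len(columns):
            rows.append(dict(zip(columns, map(int, fields))))
    if not rows:
        raise ValueError("no vmstat samples found")
    n = len(rows)
    return {
        "samples": n,
        "runqueue_total": sum(r["r"] for r in rows),
        "runqueue_avg": sum(r["r"] for r in rows) / n,
        "interrupts_avg": sum(r["in"] for r in rows) / n,
        "context_switches_avg": sum(r["cs"] for r in rows) / n,
        "idle_avg": sum(r["id"] for r in rows) / n,
    }

# Illustrative sample only (NOT measured data).
SAMPLE = """\
procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----
 r  b   swpd   free   buff  cache   si   so    bi    bo   in   cs us sy id wa st
 0  0      0 9000000 100000 3000000    0    0     0     0  300  400  1  0 99  0  0
 1  0      0 9000000 100000 3000000    0    0     0     0  320  440  0  0 100  0  0
 0  0      0 9000000 100000 3000000    0    0     0     0  310  420  1  0 99  0  0
"""

print(summarize_vmstat(SAMPLE))
```

One caveat: the first vmstat line reports averages since boot rather than for the sampling interval, so you may want to drop it before averaging.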

Kernel profiling

Here, I must disappoint you a little bit. Due to perf's limited support for older hardware, I can only show some of the perf stat values. The others show as unsupported. Indeed, perf listed these:

<not supported> cycles

<not supported> instructions

<not supported> branches

<not supported> branch-misses

So my limited table then offers the following results for a 1m idle desktop:

| Metric | Wayland | X11 compositing on | X11 compositing off |
|---|---|---|---|
| CPU clock (ms) | ~496,000 | ~499,000 | ~487,000 |
| Context switches | 21,760 (43.835/s) | 17,023 (34.133/s) | 20,575 (42.213/s) |
| CPU migrations | 283 (0.570/s) | 157 (0.315/s) | 194 (0.398/s) |
| Page faults | 19,508 (39.298/s) | 1,683 (3.375/s) | 1,513 (3.104/s) |

From this limited set, it is somewhat hard to normalize the data. Wayland used a bit less CPU clock time than X11 (ON), but not X11 (OFF). It had the highest number of CPU migrations, page faults and context switches. But that's not enough to tell a good story.
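A quick sanity check on the table, as a sketch: the per-second figures appear to be the raw counts divided by the CPU clock time in seconds, so dividing a count by its rate should give back the CPU clock row. That reading of the perf stat output is an assumption, but the numbers line up with it. The dictionary layout and helper name are made up for illustration.

```python
# (count, rate per second) pairs from the table above.
perf = {
    "wayland": {"context_switches": (21760, 43.835), "page_faults": (19508, 39.298)},
    "x11_on": {"context_switches": (17023, 34.133), "page_faults": (1683, 3.375)},
    "x11_off": {"context_switches": (20575, 42.213), "page_faults": (1513, 3.104)},
}

def implied_clock_seconds(session, metric="context_switches"):
    """CPU clock time (s) implied by a count and its per-second rate."""
    count, rate = perf[session][metric]
    return count / rate

for session in perf:
    print(f"{session}: ~{implied_clock_seconds(session):.0f} s of CPU clock")

# The one metric that stands out: page faults, Wayland versus each X11 session.
wayland_faults = perf["wayland"]["page_faults"][0]
for session in ("x11_on", "x11_off"):
    factor = wayland_faults / perf[session]["page_faults"][0]
    print(f"Wayland page faults vs {session}: {factor:.1f}x")
```

Since the CPU clock values are within about 2% of one another, the raw counts are already roughly comparable across the three sessions, and the page fault gap (an order of magnitude) is the only figure here that is clearly beyond noise.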

Indeed, since the perf results are not meaningful, I decided to do something else - run a 4K video, in all three scenarios, and see what we get in the background. So, this is the opposite of idle, but should be interesting! Indeed, 4K playback is so hot right now, so, let's warm up the laptop case, shall we.

CPU, GPU load, power usage with 4K video playback

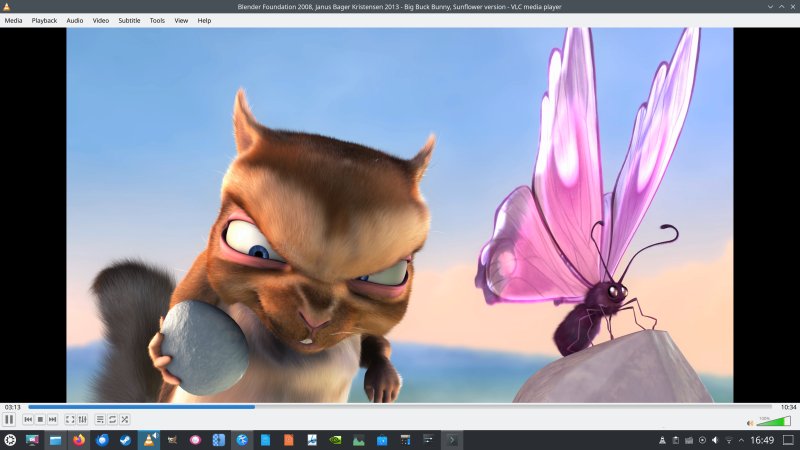

Anyhow, I decided to check what happens under some load, i.e., play a 4K video file. The test is as follows: VLC playing a (cut) clip of the first 30 seconds of the Blender-made Big Buck Bunny video (4K 60 FPS) with vmstat running in a Konsole window. I fired VLC from the command line and had it auto-quit once done.

vlc test.mp4 vlc://quit
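If you'd rather not juggle two terminal windows, the same procedure can be scripted. This is a minimal sketch, not what was actually used for the test: the function name is hypothetical, and it assumes the sampler (vmstat here) flushes each line as it prints, so nothing is lost when it gets terminated.

```python
import subprocess

def run_with_sampler(sampler_cmd, workload_cmd):
    """Run workload_cmd to completion while sampler_cmd logs in the background.

    Returns whatever the sampler printed to stdout, as text.
    """
    sampler = subprocess.Popen(sampler_cmd, stdout=subprocess.PIPE, text=True)
    try:
        # Blocks until the workload exits on its own (VLC does, thanks to vlc://quit).
        subprocess.run(workload_cmd, check=False)
    finally:
        sampler.terminate()
        output, _ = sampler.communicate()
    return output

# Hypothetical invocation mirroring the test described above:
#   log = run_with_sampler(["vmstat", "1"], ["vlc", "test.mp4", "vlc://quit"])
#   print(log)
```

Tying the sampler's lifetime to the player means every session gets sampled for exactly the length of the clip, which keeps the three scenarios comparable.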

I made sure that VLC was using hardware decoding (in all of the scenarios):

avcodec decoder: Using NVIDIA VDPAU Driver Shared Library 550.144.03 Mon Dec 30 17:08:34 UTC 2024 for hardware decoding

As it happens, I had some problems getting VLC to run correctly with the Nvidia card, under both X11 and Wayland, but more on that separately. To wit, the results while playing a 4K video:

| Metric | Wayland | X11 compositing on | X11 compositing off |
|---|---|---|---|
| Average no. of tasks in the runqueue | 0.51 | 0.09 | 0.26 |
| Total tasks in the runqueue | 18 | 3 | 9 |
| Interrupts (in) | 1507 | 1502 | 1191 |
| Context switches (cs) | 2192 | 4672 | 3333 |
| Idle CPU % (id) | 87.46 | 95.74 | 96.40 |

The results are consistent with everything we've seen so far, but with some differences, too. First, the leanest session is X11 with compositing off, using only 3.6% CPU. X11 with compositing on used 4.26%, a relative difference of 18%. This is big, because it's no longer just idle figures, this is system load. Imagine playing a 90m video during which your CPU uses 18% more cycles, and probably 18% more power.

The Wayland session used 12.54% CPU. This sounds as if it didn't fully and properly utilize the GPU, although VLC reported VDPAU being used for hardware decoding. This means that under Wayland, the 4K playback used roughly 3x more CPU. It handled about the same number of interrupts as the X11 (ON) session, and had the fewest context switches, which may explain why it was also the most sluggish, as it would seem the CPU execution was geared toward "batch" computation (higher CPU) rather than interactive latency, which is what you see with context switches for GUI tasks.
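To put the idle and under-load CPU figures side by side, here is a small sketch that turns the vmstat idle percentages into utilization and then into "times more CPU" ratios. The helper names are made up for illustration, and the only assumption is that utilization equals 100 minus the idle column.

```python
# Idle CPU % (vmstat "id" column) from the idle and 4K playback tables.
idle_pct = {
    "idle desktop": {"wayland": 99.18, "x11_on": 99.48, "x11_off": 99.57},
    "4K playback": {"wayland": 87.46, "x11_on": 95.74, "x11_off": 96.40},
}

def busy(idle):
    """CPU utilization is whatever is not idle."""
    return 100.0 - idle

def ratio(scenario, session, baseline):
    """How many times the CPU of `baseline` that `session` used."""
    table = idle_pct[scenario]
    return busy(table[session]) / busy(table[baseline])

for scenario in idle_pct:
    for baseline in ("x11_on", "x11_off"):
        factor = ratio(scenario, "wayland", baseline)
        print(f"{scenario}: Wayland used {factor:.1f}x the CPU of {baseline}")
```

The ratio depends on which X11 session you take as the baseline, which is why "about 3x" and "3-4x" both describe the same playback data.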

GPU, power and temperature

Ah! I used powertop and nvidia-smi to see what gives. Unfortunately, nvidia-smi only reported data for the X11 session, so I can't really do a comparison there, although compositing on, off (like wax on, wax off, only better) is still a valid test unto itself.

| Metric | Wayland | X11 compositing on | X11 compositing off |
|---|---|---|---|
| Battery drain (W) | 58.1 | 53.0 | 50.7 |
| VLC (GPU memory MB) | ? | 409 | 409 |
| GPU temp (deg C) | ? | 51 | 51 |
| GPU util (%) | ? | 100 | 81 |

With X11, we can see, once again, that having compositing off makes a difference. Most importantly, power draw and GPU utilization. Specifically, X11 (ON) used 5% more power and 23% more GPU! So if anything, that's already telling.
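For repeatable GPU readings, nvidia-smi can be polled in CSV mode and parsed. The following is a sketch under assumptions: the function names are hypothetical, only two query fields are requested, and values the driver can't report (which seems to be what happened in the Wayland session) are mapped to `None` instead of crashing the parser.

```python
import subprocess

QUERY_FIELDS = ["utilization.gpu", "temperature.gpu"]

def parse_gpu_csv(line):
    """Parse one line of `nvidia-smi --format=csv,noheader,nounits` output."""
    parsed = {}
    for name, raw in zip(QUERY_FIELDS, line.split(",")):
        try:
            parsed[name] = float(raw.strip())
        except ValueError:
            # e.g. "[N/A]" when the driver cannot report the value
            parsed[name] = None
    return parsed

def sample_gpu():
    """Take one reading from the first GPU (needs nvidia-smi on the PATH)."""
    result = subprocess.run(
        ["nvidia-smi",
         "--query-gpu=" + ",".join(QUERY_FIELDS),
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout.splitlines()[0])

# A line matching the X11 (ON) column of the table above:
print(parse_gpu_csv("100, 51"))
```

Calling `sample_gpu()` once a second during playback and averaging the results would give a steadier figure than eyeballing a single reading.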

Power usage wise, Wayland consumed about 9-15% more battery juice than X11. My earlier guess of about 18% more power wasn't that far off (considering the charger comes at a nice 135W rating). So yes, this does align also with the higher CPU utilization numbers we saw earlier. The differences aren't astronomical, but they are, still, indicative. And they align with what I've been saying for years and years.
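To make the power gap more tangible, here is a rough sketch that converts the measured draw into energy over a 90-minute video. The big assumption is that the draw stays constant for the whole film, which a 30-second clip can't prove. The helper names are made up.

```python
# Power draw in watts during 4K playback, from the table above.
load_watts = {"wayland": 58.1, "x11_on": 53.0, "x11_off": 50.7}

def pct_more(value, baseline):
    """How much larger `value` is than `baseline`, in percent."""
    return (value - baseline) / baseline * 100.0

def energy_wh(watts, minutes):
    """Energy in watt-hours, assuming a constant draw."""
    return watts * minutes / 60.0

for session, watts in load_watts.items():
    print(f"{session}: {energy_wh(watts, 90):.2f} Wh for a 90-minute video")

for baseline in ("x11_on", "x11_off"):
    extra = pct_more(load_watts["wayland"], load_watts[baseline])
    print(f"Wayland draws {extra:.1f}% more than {baseline}")
```

Under that assumption, the Wayland session would burn roughly 11 Wh more than X11 with compositing off over a full-length film.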

However, I would still like to see GPU data, because in Wayland's defense, so to speak, it is possible that VLC didn't fully or correctly utilize the card (even though it said so), and/or in this specific setup with Plasma 5.X and such, the necessary support is wonky, partial or missing. That on its own does not exonerate Wayland, not at all, quite the contrary, it proves the fact X11 shouldn't be deprecated and all that. But, but, when it comes to benchmarks, the baseline needs to be identical, for better or worse.

So please, for those reading this article, I fully stand behind idle power figures, idle CPU figures and all of the X11 findings with compositing on and off. The under-load CPU figures are most likely indicative, but without GPU data from nvidia-smi for Wayland, I must reserve the interim conclusions above. The same therefore applies to loaded system power readings. It is quite likely that the results are fully accurate, as we've seen similar data from an AMD-powered machine a few days ago. But I must try to be fair as much as possible.

WebGL performance

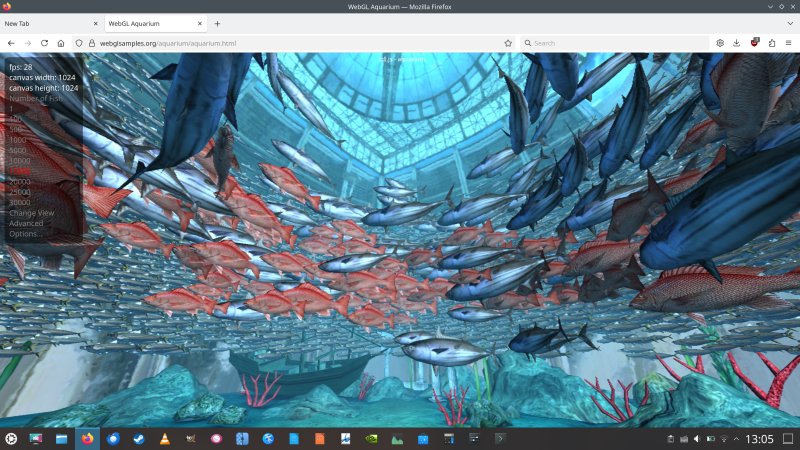

Oh, fishy fishy fishy fish, and it went wherever I did go! So, I decided to do even more testing. I fired up Firefox, and navigated to WebGL Aquarium, which lets you test how many frames per second (FPS) you get while running a canvas with a certain number of fish. You can go up as much as you like, to see where your graphics card chokes. A typical run looks like this:

And so, let's see FPS first, with the charger plugged in:

| FPS | Wayland | X11 compositing on | X11 compositing off |
|---|---|---|---|
| Fish count: 15,000 | 16 | 29 | 29 |
| Fish count: 20,000 | 12 | 23 | 23 |
| Fish count: 25,000 | 10 | 18 | 18 |

We can see a major difference between Wayland and X11. There was no difference in the FPS for X11 with or without compositing. Checking nvidia-smi (for X11), the card reported a maximum draw of 36W (it does 18W on battery). I launched the utility to see what happens at the 25,000 fish count, with the charger on, and then, without it. The results are based on what nvidia-smi and powertop reported.
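How major is the difference? A quick sketch that expresses the X11 frame rate as a multiple of the Wayland one, at each fish count. Since compositing on and off gave identical FPS with the charger plugged in, a single "x11" column is used. The names are made up for illustration.

```python
# FPS with the charger plugged in, keyed by fish count, from the table above.
fps = {
    15000: {"wayland": 16, "x11": 29},
    20000: {"wayland": 12, "x11": 23},
    25000: {"wayland": 10, "x11": 18},
}

def x11_advantage(fish):
    """X11 frame rate as a multiple of the Wayland frame rate."""
    return fps[fish]["x11"] / fps[fish]["wayland"]

for fish in fps:
    print(f"{fish} fish: X11 renders {x11_advantage(fish):.2f}x the frames")
```

The gap stays in a narrow band of roughly 1.8x to 1.9x across all three fish counts, so it does not look like an artifact of one particular load level.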

| Metric | Wayland | X11 compositing on | X11 compositing off |
|---|---|---|---|
| Firefox (GPU memory MB) | ? | 296 | 296 |
| Xorg (GPU memory MB) | N/A | 387 | 311 |
| Wayland (GPU memory MB) | ? | N/A | N/A |
| GPU temp (deg C) | ? | 56 | 57 |
| GPU util (%) (charger on) | ? | 30-31 | 24-25 |
| Battery drain for 25,000 fish (W) | 52.5 | 54.5 | 57.4 |
| FPS for 25,000 fish on battery | 8-9 | 10 | 15 |

Superbly interesting results. Namely:

- Wayland used the least amount of power, but this is probably because the graphics stack couldn't render any more fish at the higher count. Even so, it was 4% better than X11 (ON) and 9% better than X11 (OFF). Indeed, the scenario with compositing off used the highest amount of power. This is different from what we've seen with the 4K playback, but so is the GPU utilization.

- The X11 (OFF) scenario also yielded the highest FPS count - 50% more than with compositing on, and even more than what Wayland did. This is a huge difference, and one in favor of not having compositing, period.

- Firefox memory usage with compositing on and off was identical. Xorg used about 20% less GPU memory with compositing off (311 MB versus 387 MB).

- The GPU utilization was also the lowest with compositing off, 24-25% compared to 30-31% with it on. This translates into roughly 20% less utilization, correlative with the Xorg GPU memory. This is similar to the 18% CPU utilization difference between X11 (ON) and X11 (OFF) in the 4K video playback test, but it does not correlate with the power draw. I think this warrants more checks.

- The card temperature was higher than while playing the 4K video, but this could also be due to ambient conditions, and the duration of the test, so take this particular set of numbers with a grain of salt.
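Two things in the list above are easy to trip over, so here is a small sketch. One, "X% less" and "X% more" are not symmetric, so the baseline needs to be spelled out. Two, raw power draw says little without the work done for it, so frames per watt is a handy (if crude) efficiency figure. The helper names are made up, and the midpoints used for the reported ranges are assumptions.

```python
def pct_less(value, baseline):
    """How much smaller `value` is than `baseline`, in percent of `baseline`."""
    return (baseline - value) / baseline * 100.0

def pct_more(value, baseline):
    """How much larger `value` is than `baseline`, in percent of `baseline`."""
    return (value - baseline) / baseline * 100.0

xorg_mb = {"on": 387, "off": 311}
gpu_util = {"on": 30.5, "off": 24.5}  # midpoints of 30-31 and 24-25 (assumption)

print(f"Xorg memory, OFF vs ON: {pct_less(xorg_mb['off'], xorg_mb['on']):.1f}% less")
print(f"Xorg memory, ON vs OFF: {pct_more(xorg_mb['on'], xorg_mb['off']):.1f}% more")
print(f"GPU util, OFF vs ON: {pct_less(gpu_util['off'], gpu_util['on']):.1f}% less")

# (FPS, watts) at 25,000 fish on battery, from the table above.
battery = {
    "wayland": (8.5, 52.5),  # 8.5 is the midpoint of the reported 8-9 FPS (assumption)
    "x11_on": (10, 54.5),
    "x11_off": (15, 57.4),
}

def fps_per_watt(session):
    """Frames per second delivered for each watt drawn."""
    frames, watts = battery[session]
    return frames / watts

for session in battery:
    print(f"{session}: {fps_per_watt(session):.3f} FPS per watt")
```

Seen this way, the session with the highest power draw (X11 with compositing off) is also the one doing the most work per watt, which helps explain why the power numbers and the utilization numbers seem to disagree.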

General usability

Now, let's also talk about general behavior, Wayland vs X11. To wit:

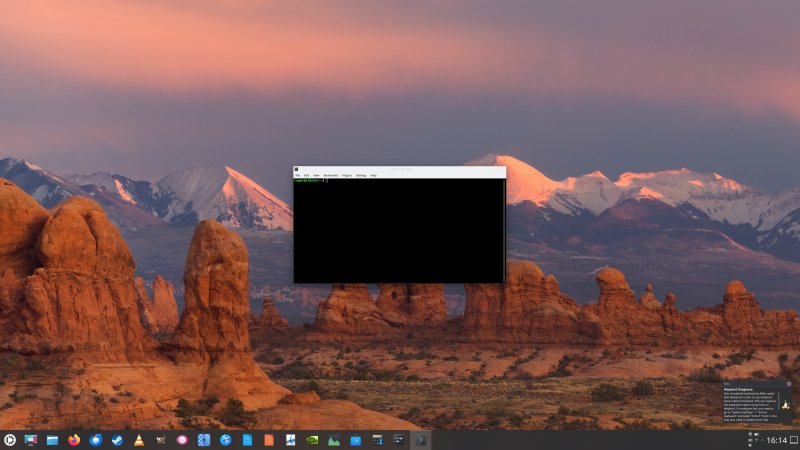

- The Wayland session isn't preinstalled in Kubuntu 24.04, you need to add it yourself. At least, this was the case in my scenario. Maybe because of the Nvidia card?

- The Wayland session usually takes longer to log in, about 4-5 seconds versus ~1 second for X11.

- In my testing, the X11 login and the Wayland login each failed once. In both scenarios, I ended up with a black desktop. With Wayland, this happened while running in PRIME Performance mode, which basically only uses the Nvidia card. With X11, this happened in the PRIME On-Demand mode. For X11, Ctrl + Alt + Del allowed me to log out again, and re-login. Wayland required a hard system reboot.

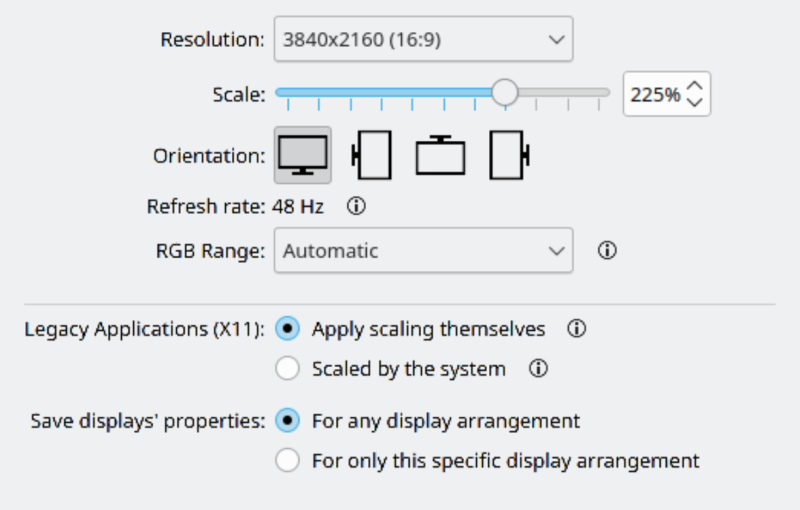

- The Wayland session never remembered my 225% desktop scaling factor. Every login, I had to reapply it.

For some reason, Wayland compounded X11 scaling factors and its own. Thus, the panel is huge, and so is the mouse cursor, which like I mentioned in the Plasma 6 story, cannot be captured by Spectacle in Wayland. Also, notice the right border in Settings, but there ain't no left one.

- The fonts are blurry, but not everywhere. Kate renders fonts fine, for instance. Settings does not.


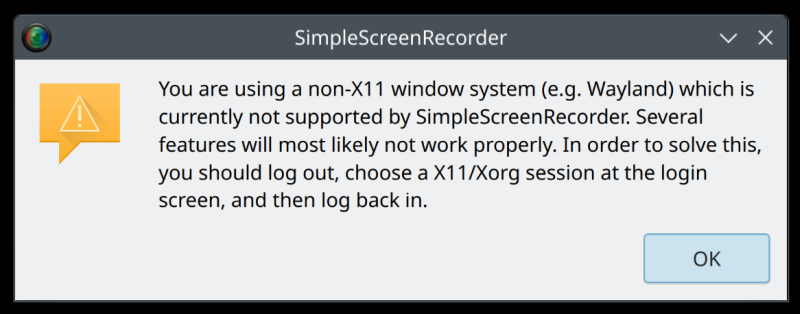

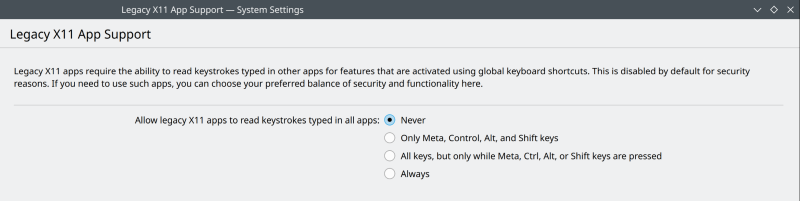

- I tried to record the desktop responsiveness (in Wayland) using Simple Screen Recorder, and it told me it requires X11. I tried regardless, and failed. One, the hotkeys didn't work. Two, the actual recording didn't work. Now, the reason why hotkeys don't work is would-be "security". For an obsessive reason, the one and only security concern that comes up in Wayland vs X11 is that a "rogue" X11 app could listen on your keys. Absolute nonsense for a million reasons. One, why stop at that? Why not have sophisticated malware that can do so much more? Two, if your system is infected, then game over, really. Three, don't install malicious apps, and problem solved. Four, I can write a keylogger in 6 minutes, it's super simple and does not require X11. In fact, anyone with some C knowledge and rudimentary understanding of the Linux device tree can do the same thing in about 6 minutes. Five, there hasn't been one single practical example of this "vulnerability" ever, and it keeps getting brought up as if it's the living plague of Linux.

Even the window borders look glitchy (top right side). Most programs "work" under Wayland, right.

Shakespeare wrote a comedy play about this. It's called: Much Ado about Nothing.

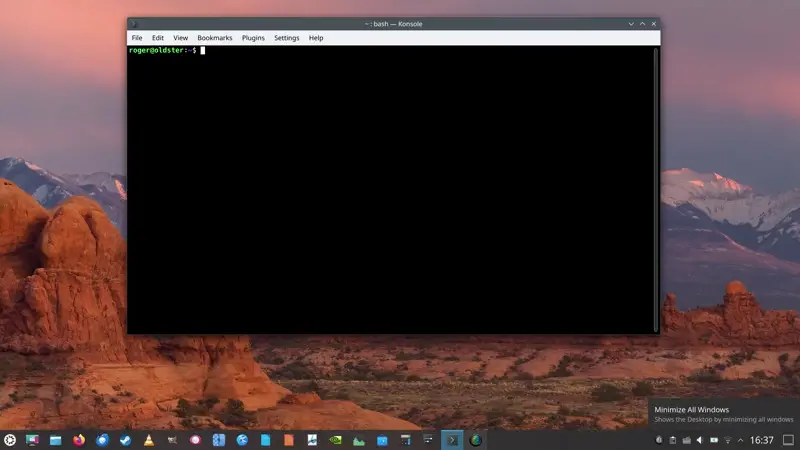

- To be fair, there are visual glitches under X11, too. For example, two Konsole windows, side by side:

- I did make a little video showing the following sequence: X11 session, Konsole window, minimize, maximize about one second apart, three repetitions. I did it once with compositing enabled, and once with compositing disabled. It shows how much snappier the OFF session is. The fact there's no such option for Wayland is a big downside for older, weaker systems. As I showed you in my ffmpeg guide and my GIF guide, I recorded two videos, slightly trimmed them, made them smaller, joined them, and converted them to the WebP format, as it supports a wider range of colors, and with a much smaller output. From about 11 MB to about 300 KB. Anyway, witness the glory of no compositing. If in doubt, it's the SECOND three min/max sequence in this animated image file:

- Performance wise, the X11 desktop was snappier and had fewer artifacts overall.

- VLC looks worse in the Wayland session, and the mouse cursor never fades - it obscures the playback, but as I noted earlier, Spectacle won't be able to capture it.

The menu is scaled, the bottom part of the UI is not. Only happens under Wayland.

And with an empty playback window ...

- Wayland never restored windows in their correct place; it always cascaded them in the dead center.

- Double scaling factor makes things odd:

System menu under Wayland, 225% desktop; the double scaling factor ... eh ... factors in somewhere.

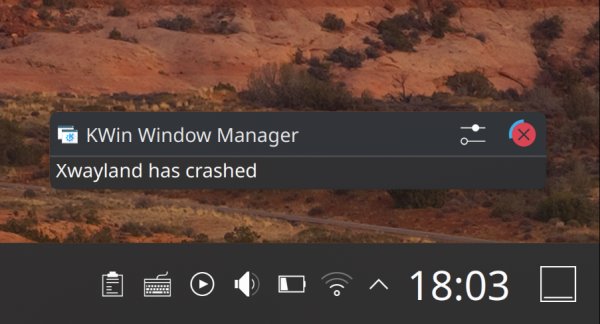

- You get this weird error when logging in:

During my testing, XWayland crashed once, and after that, the whole system froze, and I had to hard reboot it. Not sure why, but could be perhaps related to VLC playback and VDPAU acceleration in the background.

An aside, hardware decoding problems

I had issues early on getting VLC to use hardware decoding, in both X11 AND Wayland. Error messages, missing shared libraries. I also tried the snap build, same problem. I wanted to try the Flatpak build, oops, unverified package.

First, the following message:

Failed to open VDPAU backend libvdpau_va_gl.so: cannot open shared object file: No such file or directory

Resolved by installing:

sudo apt install libvdpau-va-gl1
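Before reaching for apt, it can help to see which VDPAU backends are actually present. A minimal sketch, with assumptions stated up front: the directory below is the usual Debian/Ubuntu location on x86-64 and may differ elsewhere, and the function name is hypothetical.

```python
from pathlib import Path

# Assumption: the usual Debian/Ubuntu location for VDPAU backends on x86-64.
DEFAULT_VDPAU_DIR = Path("/usr/lib/x86_64-linux-gnu/vdpau")

def list_vdpau_backends(directory=DEFAULT_VDPAU_DIR):
    """Return backend names found as libvdpau_<name>.so* files in `directory`."""
    directory = Path(directory)
    if not directory.is_dir():
        return []
    names = set()
    for path in directory.glob("libvdpau_*.so*"):
        # "libvdpau_va_gl.so.1" -> "va_gl"
        names.add(path.name[len("libvdpau_"):].split(".so")[0])
    return sorted(names)

print(list_vdpau_backends())
```

If `va_gl` is missing from the list, that matches the error above. If `nvidia` is missing, the proprietary driver's VDPAU library isn't where the loader expects it.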

Then, the following message:

Failed setup for format vdpau: hwaccel initialisation returned error.

In VLC, you can change the video output (to use VDPAU, among other things), and also under Codecs, there's an option to tell the player to use hardware acceleration or not. With either the automatic settings or manual tweaks, this made no difference. In a few instances, VLC wouldn't even play.

What did help - the only thing that did help - was to change the Nvidia PRIME profile from On-Demand to Performance, reboot, and then, everything was peachy. But this effectively breaks the whole idea of having hybrid graphics on the laptop. Amazing, innit. The downsides are:

- Much higher idle power utilization.

- If you already configured your display scaling with a hybrid setup, once you switch to Performance, some of the elements will look weird. For example, even in an X11 session, Dolphin and every file dialog will show icons and menu elements without scaling, while the fonts will be scaled up, creating a jarring effect, similar to what you see in the VLC under Wayland screenshot.

- And your hardware isn't utilized as intended, cor!

The worst thing, this worked fine, until I guess some update messed something up. In 2025, this is an inexcusable scenario. Linux cannot win Windows people over by showing them nerdy vomit like VAAPI and VDPAU and missing libraries nonsense like it's 2003.

So, dear Linux nerds, if you want Linux to succeed, there can't be any tomfoolery like this. The user should never ever ever have to do anything to get their media players to just work and play content correctly utilizing the hardware. I don't approve of Windows, especially not Windows 11, but by and large, I never had to worry about this scenario there, and usually, Windows uses less battery on most laptops I tested. Not all, but most. So yeah, the year is 2025. This playback problem is 100x more important than the X11 vs Wayland drama.

Conclusion

There you go, another benchmark, if you will. This time, I tried Intel CPU, hybrid Intel + Nvidia graphics, 4K display, Kubuntu 24.04, Plasma 5.X. A somewhat different cocktail than what we had last time, but overall, the results are quite similar. Wayland is simply less efficient than X11. For that matter, turning compositing off helps immensely on an old device, and this is an important element in the equation.

My 11-year-old laptop handled 4K 60FPS video and the WebGL simulation quite well, so that also tells you about the state of hardware, and what you can or cannot do with "modern" machines, and what qualifies as modern. Numbers wise, on idle, the Wayland session used almost the same amount of power as the X11. Under load, it used more power for the 4K playback, less for WebGL. CPU wise, it consumed a bit under 2x more on idle, and about 3-4x more when playing a 4K clip. There were also tons of glitches, font blurriness, and application support problems. There. Now, I may yet do more testing, perhaps even try Fedora and Gnome and compare the results to KDE neon on the AMD-powered laptop. I may also add these new load scenarios into the mix, too. But for now, that ought to be enough for one article. So, on that unhappy note, I bid thee farewell.

Cheers.